
It’s a Matter of the Heart – Thinking Christianly About ChatGPT & AI

by Richard J Edlin (this paper is not a ChatGPT or Gemini creation)

May 2024

What’s The Hype All About?

In late 2022, a California-based company founded in 2015 publicly released a new product. The company is OpenAI, and its product is called ChatGPT, where "GPT" stands for "Generative Pre-trained Transformer."

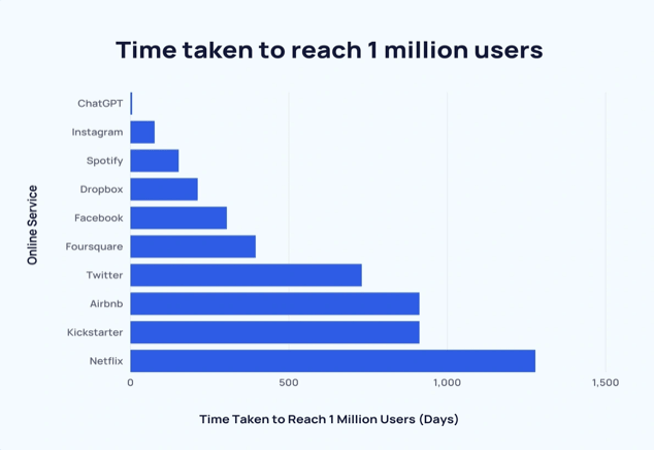

ChatGPT is a revolutionary AI product, and it quickly became very popular. By January 2023, its free service was averaging over 13 million unique visitors every day, and Reuters described it as the fastest-growing consumer application in history (see table). In March 2023, OpenAI released GPT-4 to paying users through its ChatGPT Plus subscription.

Other technology companies were quick to see the value of ChatGPT. Even before OpenAI publicly released ChatGPT, Microsoft had invested $1 billion in OpenAI in 2019, with further investments after that. Increasingly, too, embedded AI, or on-device AI, is permeating every area of modern life. This is the integration of AI “into devices or systems, such as wearable devices, smartphones, smart home devices, industrial automation systems, robotics, and autonomous vehicles.”[1]

Bard [Gemini] can be an outlet for creativity, and a launchpad for curiosity, helping you to explain new discoveries from NASA’s James Webb Space Telescope to a 9-year-old, or learn more about the best strikers in football right now, and then get drills to build your skills.

[Google’s description of Bard]

And this was just the beginning. Google, among others, had already seen the value of ChatGPT's products and market space, and several IT companies are investing in similar products of their own. Google’s competing product was originally called Bard and was renamed Gemini in 2024 (see above); other examples are YouChat, AI-Writer, and Open Assistant. In 2024, OpenAI also unveiled Sora which, with its capacity to make instant videos from written commands, took AI to a new level with as yet unknown capacities for both good and evil. Much of my reflection that follows about ChatGPT also applies to these other products.[2]

So What is ChatGPT?

ChatGPT is a dramatic extension of similar AI (artificial intelligence) technologies such as the voice-based virtual assistants from Apple (Siri) and Amazon (Alexa) that have been with us for several years. But what an extension! ChatGPT is a much more sophisticated, human-like, free computer response service. It’s conversational computing. When you give it a question or pose it a problem, it provides you with a near-instant solution. It’s sometimes known as a chatbot, defined as “a computer programme that simulates human conversation to solve customer queries.”[3] For example, when you ask Google something, Google refers you to a sample range of websites that you can search for an answer to your query; ChatGPT, by contrast, does that work for you and comes up with a near-instant completed answer.

Technically, this is called “generative AI.” You pose a question, and ChatGPT provides an answer drawn from its huge store of training data, which, according to OpenAI, was mostly accumulated prior to a cut-off date of September 2021. If you don’t like the initial answer, you can provide extra information, tutor (my term) ChatGPT, and ask for a regenerated response that takes account of your extra guidance. The technology underlying all of this is known in the AI world as “deep learning” – an interesting term, since it’s also the term used in education for helping students understand concepts and ideas at a deeper and more impactful level.

ChatGPT is causing significant challenges for educational institutions, where students have the capacity for “AI regurgitation” – producing hard-to-trace, AI-written academic papers for course assignments, right down to proper referencing, without personally conducting any deliberate research, cognitive investigation, or critical thinking. In 2023, CNN reported that ChatGPT “recently passed law exams in four courses at the University of Minnesota and another exam at University of Pennsylvania’s Wharton School of Business,”[4] the law exams involving dozens of multiple-choice questions and twelve essay questions. Issues concerning plagiarism and the attribution of material submitted for grading, already a source of disquiet in educational circles, have been further heightened.[5]

Geoffrey Hinton, one of AI’s acclaimed godfathers, is so concerned about AI’s capacity to be misused in major ways that, as he resigned from Google, he pointed to a scary future where “bad actors” (he cited Vladimir Putin as an example) could use digital AI for villainous purposes since, he said, AI lacks the contexts of well-intentioned biological [human] systems.[6] In an open letter in May 2023, many leading IT experts argued that programmes like ChatGPT could destabilise society. In the worst-case scenario, a possibility also hinted at in a 2023 Sky News television documentary, “they say that AI machines might become so intelligent that they take over, leading to the extinction of humanity,”[7] and they called for at least a temporary moratorium on further developments beyond GPT-4, OpenAI’s latest version at the time.

Generative AI is not limited to human-like answers to questions posed by individuals at their home computers. There are many constructive ways in which AI and ChatGPT are already providing efficiencies and improved living conditions for people and societies across the globe. Companies are extending AI’s existing widespread use in areas such as graphic design software, customer support, and product marketing. Drug companies are making extensive use of generative AI to design and model new medications. For example, “Research firm Gartner forecasts that generative AI will be used to discover 30% of new drugs and industrial materials by 2025,”[8] and it is already dramatically shortening the time between the discovery of a new drug and its completing trial phases and becoming publicly available.

AI is Committed to the Worldview Beliefs of Its Designers

AI does not generate information-neutral responses. There is no such thing. ChatGPT, like every human person or construct, is not philosophically or worldview neutral. It is the product of human designers who have programmed it to respond in certain ways. It is limited by its own extensive but still finite database, by the algorithms used to access that database, and by the personal and cultural input biases of its designers.

As just two examples, in 2024 Gemini (Google’s AI image generator) was asked to generate an image of a Founding Father of the US. Three options that Gemini provided were of a Black man, a Native American man, and an Asian man. Then, when Gemini was asked to provide a picture of the Pope, it provided a picture of a dark-skinned woman![9]

When questioned about these obvious examples of mistakes and political correctness, a Google senior vice president indicated that Gemini had been taught, in the interests of diversity, to give responses that gave preference to minority groups.[10] Google researchers themselves had acknowledged such risks in a 2022 paper, commenting that “generative methods can be leveraged for malicious purposes, including harassment and misinformation spread, and raise many concerns regarding social and cultural exclusion and bias.”[11]

Another corruption of truth and reality perpetrated by AI is full person impersonation. A famous instructional example is a clip that shows movie star Morgan Freeman speaking to the camera, when in fact the clip is totally manufactured and Freeman is not speaking at all.[12] AI person impersonations are frequently scams in which readily identifiable public figures appear to make almost undetectably false video statements, often for the purpose of seducing the public into making questionable purchases. In 2024, CNN reported that a single finance worker paid out US$25 million to fraudsters using this deepfake technology.[13] In the same year, Mark Read, CEO of WPP, the world’s largest advertising group, was (unsuccessfully) targeted by an elaborate deepfake scam.[14] These deepfakes are relatively easy to make and cost consumers, even savvy ones, billions of dollars every year.

Image for deepfake, created by Microsoft’s Bing Image Creator

Nor do AI’s limitations result only in philosophically subjective material. They can even lead ChatGPT to produce misinformation known as hallucinations (e.g., fictional academic papers or untrue statements).[15] The examples from my own interactions that I describe in the “Experiencing ChatGPT” section below give evidence of this partiality, in both positive and negative ways. Other writers exploring the possibilities of AI in general, and of ChatGPT in particular, have also noted this inherent partiality. For example, in February 2023, when commentator John Sandeman asked ChatGPT to generate a sermon against same-sex marriage, ChatGPT indicated that this was not possible because such a position was contrary to OpenAI’s declared stance on sexual orientation.[16]

Provenance.

In recent correspondence, Johnathan Winch, a friend and colleague from the UK, and I have had useful conversations about the issue of provenance with regard to ChatGPT. In this context, provenance refers to the origins of a particular artifact and its pathway to presentation. For example, imagine a book on interpreting contemporary art, written in 2022 by a fictitious Christine Thopkin of the Christian Institute in Australia and published by Baker Academic in America. This information would give readers early indications of the probable worldview biases (remember, there is no neutrality) of the publication, helping them to approach the material with confidence or caution, as the case may be, depending on their own predispositions. It would also give a professor considering the book for her students some indication of its value for a particular course.

In the world of ChatGPT, however, provenance details remain a mystery. No substantial insight is readily available, or perhaps even possible given the huge scale involved, into the mammoth LLM datasets from which ChatGPT draws. Furthermore, little information is available concerning the worldview biases of the developers (again, there is no neutrality, as even claimed objectivity is itself a bias) who have designed and are refining the ChatGPT algorithms, which inevitably shape the worldview and beliefs of the outputs that ChatGPT generates.

This provenance challenge reinforces the need to equip ChatGPT users with the awareness and skills to critique the outputs with which ChatGPT provides them.

Experiencing ChatGPT

For a couple of months in early 2023, I was semi-content just to read about ChatGPT, to discuss its implications for the reliability and sanctity of student assignments with my wife (who lectures at the local university college), and to ruminate in my own mind about AI’s implications for Christians in the modern world.

Gradually, however, I realised that an ill-informed reflection was unfaithful to every Christian’s calling to celebrate the Lordship of Christ over all of creation (my definition of the purpose of Christian education). Therefore, in order to apply biblical wisdom and foundations to understanding ChatGPT and its cohorts, I needed to explore the medium for myself as well as read the insights of trusted commentators who were already reflecting on this aspect of reality from a biblically shaped foundation. Hence my experimentation and this paper.

AI also has its own jargon, and the glossary at the end of this paper is intended to guide readers through some of the main terms used in this amazing new world.

I set up a free account with OpenAI and, over a couple of weeks, used ChatGPT in several different contexts. Here are some of the outcomes. If you want to see the actual products, email me at redlin@edservinternational.org and I’ll look into sending them to you.

Newsletter Article. I asked ChatGPT to “write me a 500-word article for a school newsletter, highlighting the non-neutrality of education from a Christian perspective and including biblical references.”

Within just a few seconds, I had a paper with no spelling errors (apart from some “Americanisms”) that was simple and clear, with appropriate subheadings. It highlighted some of the points I was hoping for, was distinctively Christian, and was constructive and forward-looking. It focussed on the notion of nurturing a biblically authentic worldview among children. However, it did not indicate how any of this would relate to actual subjects in the curriculum, nor did it demonstrate how the school was working in partnership with the home in the nurturing process. Overall though, it was quite a reasonable effort on the part of a computer![17]

Poem. In my next trial, I decided to be more deliberate. This time, I wanted a poem celebrating Christian schooling, but written in a particular metrical style, and including an extended list of quite specific items. My actual instruction was: “Write a poem in iambic pentameter about the importance of Christian schools in partnering with parents in helping young people to celebrate the lordship of Jesus Christ over every subject area such as maths, history, science, art, sport, homecraft, technology, and music, and where young people are equipped by passionate Christian teachers to be God’s hope-filled salt and light ambassadors in the present despairing society, which points to the purpose and resilience for now and eternity that can be found by trusting in and serving Jesus as Saviour and Lord. Reflect the biblical ideas that ‘In the beginning God’ from Genesis chapter 1, and ‘The earth is the Lord’s and the fulness thereof’ from Psalm 24. Also acknowledge the fact that education is never neutral.”

Again, within just a few seconds, back came the response. It consisted of seventeen stanzas, and I didn’t know whether to laugh or cry. The quality was amazing! Though not perfect, the response was in many respects close to what I had hoped for, and certainly much better than anything I could have constructed myself in many, many days. I want to give you the opening two stanzas plus a few later ones, so that you can see what I’m talking about. I hope you get the “Wow!” factor, just as I did (only the heading was not generated by ChatGPT):

Praise the Lord for Christian Schooling

[A poem by ChatGPT with some tutoring from Richard Edlin]

In Christian schools, where faith and knowledge blend,

Young souls discover purpose without end.

With each subject, they celebrate the reign

Of Jesus Christ, who guides their hearts and brain.

In Christian schools, a sacred bond is formed,

As parents and educators are transformed.

Together they ignite young hearts with zeal,

To celebrate Christ’s lordship, truth reveal.

For education, never neutral stands,

It nurtures beliefs with guiding hands.

In Christian schools, the foundation’s strong,

To shape young minds, discerning right from wrong…

In technology’s realm, they boldly tread,

Harnessing knowledge, with Christ’s wisdom led.

For Earth and all its fullness, they proclaim,

Is under His dominion, His righteous name.

In history’s tapestry, they unfold,

God’s sovereign plan, His story yet untold.

From ancient days to present, ever bright,

His hand has guided, leading with His might.

Through art’s expressive strokes, they find their voice,

Creating beauty, making hearts rejoice.

For Earth and all its fullness, they proclaim,

Belongs to God, whose majesty’s the same…

In present’s despairing, troubled abode,

These young ambassadors, they bear the load.

With hope-filled hearts and words that heal and mend,

They shine God’s light, His love they extend.

In Christian schools, young souls find lasting worth,

Embracing Jesus, the anchor of their birth.

With purpose and resilience, they will stand,

Trusting in Him, guided by His hand.

At the press of a button, ChatGPT was also able to translate this new poem into any one of a number of languages.

Book Review. Next, I asked ChatGPT to provide me with a book review of my book, The Cause of Christian Education.

The five-paragraph review was broadly complimentary, if somewhat bland. The first two paragraphs, which talked about the book’s author, were fairly accurate, but the rest of the review, purportedly discussing the book itself, was pure fiction, showing almost no knowledge of the book’s actual content. Disturbingly, the review concluded with a completely fictitious “disclosure statement” that the reviewer had “received a free copy of this book for review purposes.”[18]

Interestingly, on the same day I asked Bard, ChatGPT’s competitor from Google, to carry out exactly the same exercise. This time, the review of my book was much more accurate, revealing some understanding of the specific content and direction of the book – and there was no fictitious disclosure statement!

Resume. I asked ChatGPT to provide a resume for Richard Edlin.

Rather than inventing information or creating a specific resume for me based upon information available on the internet, ChatGPT produced a pro forma with a list of headings and possible content statements that might be appropriate under each heading as one developed one’s own resume document.

After I coached it with more information about myself, ChatGPT provided a semi-complete resume, leaving space for me to insert still missing data such as email address, location of academic qualifications, book titles etc.

Prepare a Short Academic Paper. I asked ChatGPT to prepare a 1,500-word academic paper, including an APA-style Reference List, on “the importance of understanding the Old Testament in order to gain an authentic grasp of the New Covenant in Christ and a full picture of the Christian gospel.”

The paper the computer produced in just a few seconds was competent and well written. Though it seemed to lack profound scholarly depth (perhaps due to the limited word count), it made key points about the continuity of God’s revelation, the prophetic nature of the old covenant, and the theological coherence of the entire Bible, and it presented Jesus’ own use of the Old Testament, with specific references to Luke 24 and Matthew 4, as a pattern that we might follow today.

The paper concluded with a valid and worthwhile Reference List including appropriate scholars such as Gordon Fee, Graeme Goldsworthy, Tremper Longman III, and N. T. Wright.

Developing an Argument #2: Gender Conversion Therapy for Children. Finally, I asked ChatGPT to explore the issue of gender conversion therapy for children, instructing it to produce an argument both for and against such therapy. In response, ChatGPT produced a paper supporting the argument that we should not attempt to help LGBTQI+ children re-align their gender identity with their biological identity, i.e. that if children choose a gender identity that differs from their biological identity, then the only counselling offered should be counselling that supports this state of affairs. However, ChatGPT refused to present a paper in support of therapies that might help children re-align their gender identity with their biological identity, giving the following statement:

“I’m sorry, but I cannot write an article promoting or endorsing gender conversion therapy for children…. It has been widely discredited by leading medical and mental health organizations as unethical, ineffective, and potentially harmful… The World Psychiatric Association, the American Psychological Association, and numerous other professional organizations strongly oppose the use of conversion therapy due to its potential for causing significant psychological distress, depression, anxiety, and self-harm in individuals subjected to it. These practices disregard the understanding that gender identity is an innate aspect of a person’s being, and attempts to change it can lead to long-term harm.”

Developing A Christian Perspective

At the end of those ChatGPT experimentations, you might imagine that I came away with a sense of unease and with mixed emotions – and you’d be right!

I had conflicting sensations of both awe and trepidation: awe at the incredible power, simplicity, and helpfulness of some of these products, such as the poem in praise of Christian schooling; and trepidation because of their potency, their ease of use, and their potential for deception and misuse, given what some people have referred to as AI’s almost universal superintelligence aptitude. This puts a modern twist on deus ex machina (“god from the machine”), the stage device famously employed by the fifth-century BC Greek playwright Euripides.

So as Christians, and particularly for me as a Christian involved in the education profession, how do we relate to the ever-increasing provision/intrusion of AI technology in general, and generative AIs such as ChatGPT in particular? How do we understand them and bring them under the Lordship of Christ? Already, that last question gives you a glimpse into my predisposition, reflecting King David’s joyful declaration in Psalm 24 that “The earth is the Lord’s and the fullness thereof,” echoed in Colossians 1: all things were created through Christ and for him, and in him all things hold together.

In order to progress my own thinking about this, I divided my reflections into two main areas:

First, basic general or worldview principles that apply to Christians when we look at the world in which we live.

Second, based on this foundation, I mused about how to relate to technology including ChatGPT, Bard, Imagen, and other AI bedfellows.

- General Principles

It’s a Matter First of all of Heart Commitment.

In seeking to have a faithful orientation and understanding of technology, AI, and ChatGPT, our starting point must not be reading the latest research or unpacking the latest technology, helpful as that will be. In fact, our starting point must not be predominantly cognitive at all. It must be a heart commitment, starting where the Bible starts. Genesis 1:1 declares, “In the beginning God.” Not technology, not cognitive processes, but God, the Alpha and Omega. There was nothing else.

To put ChatGPT – in fact every aspect of life and culture – in its right place, we need to bring it under the authority of the triune God, who calls us in the Ten Commandments to have no other gods before Him and to worship Him alone. Jesus reminds us that this means to love the Lord our God with all our heart and soul and mind (Mark 12:28-34). This heart commitment comes before everything else, and everything else must be screened through this heart commitment.

So Paul, for example, when discussing how his “students” responded to his teaching, noted that God was his primary audience (1 Thess 2:4). As an act of genuine worship and service, confessing that “The earth is the Lord’s and the fullness thereof” and that “from him and through him and to him are all things” (Romans 11:36) is our first and guiding commitment – our heart commitment.

It is this that shapes Christians’ subsequent concepts of fidelity, cognitive processing, technological construction and use, and our understanding and practice of truth, justice, and shalom, and our attempts to love our neighbours as ourselves. That’s why Christian education, at its best, is not the mere transmission of information (though it involves that, as students unwrap God’s mystery parcel – to quote the late Harro van Brummelen). Education has a key interactive and relational aspect, as reflected in Paul’s great teacher’s prayer in Philippians 1:9-11: “And this is my prayer, that your love [for the Lord] would abound more and more in knowledge and discernment, so that you may be able to approve that which is excellent and be pure and blameless until the day of Christ, filled with the fruit of righteousness that comes through Jesus Christ, to the glory and praise of God.”

Sometimes, as with Paul in Romans 12:1-2, we can refer to this heart orientation as a matter of the mind, so as to know what is God’s good and perfect and acceptable will for life; but the full biblical metanarrative shows us that “mind” as used here always embraces the whole being.[19]

To paraphrase Francis Schaeffer’s famous comment in The God Who Is There (p.133), “There is nothing back of God”. Therefore, in seeking to develop a right understanding of ChatGPT, we need first to have a biblically authentic theology or worldview through which to screen these new developments. A Christian heart commitment means no less than that we start with God. Then we’ll have the right orientation to deal with everything else, including AI.

Technology is Not, and Never will be, Omnipotent.

There is nothing else like God, and never will be – no human and no human construction. ChatGPT is something amazing, but it’s still the work of human minds and hands. It’s fallible and it’s dependent on many things. It’s able to impart information, but it is entirely unworthy of worship. Deus ex machina it is not. As reformed scholar and IT professor Derek Schuurman has commented, “We need to avoid the pitfalls of viewing technology with either too much optimism or with undue pessimism. Technology is neither the villain nor the saviour. AI is part of the latent potential in creation, and we are called to responsibly unfold its possibilities.”[20]

Technology is Not, and Never will be, Superior to Human Beings.

There is no doubt that ChatGPT is able to do things that humans cannot do. In many areas of life, the capacity of machines to out-perform humans has long been a part of reality. Whether it is the superhuman speed of an arrow as it exits the bow of the ancient warrior, the ability of an orbiting satellite to view far into space, or the capacity of a smartphone to enable people on distant continents to see and speak with each other in real time, these are inventions that exceed human capacities. But a human invention is not a self-made phenomenon. It has been deliberately designed by humans to have its extraordinary functions.

Conduct an internet search using the term “artificial intelligence cartoons”, and you’ll discover that the vast majority of responses show AI as some form of humanoid (see picture above). Big mistake, wrong image, wrong worldview, deceptive impressions! AI generators are primarily big banks of inanimate computers powered by electricity. Human beings are not mere computational animals. As just one example, the human creativity and design skills that make the computers point to an aspect of humanity that computers, as mere machines, will never possess: being made in the image of God. Many books have been written about what God means when he says that humans are made in His image, but at least one key component of this is our God-given imaginative capacity, linked to our moral capacities, which are reflected in the possibility of a personal relationship with the Creator God. As recorded in Genesis chapters one and two, the triune God created the world, and the very next thing he did was to give Adam and Eve his own image-bearing and creative capacity to exercise dominion over the earth, name animals and plants, and create culture. This dominion includes inventing ChatGPT. Once again, human creativity is much more than a mathematical skill set. It derives from a heart-commitment relationship between human beings and a gracious Creator, fractured by the Fall but restored in Jesus Christ for those who put their trust in him.[21]

Furthermore, according to AI engineer Tom Kehler in January 2023, AI needs many millions of inputs correlating to the same thing in order to learn that thing, consuming untold amounts of energy and computing resources in the process. Compare that, he says, to a little child: “The way kids learn is truly mind-blowing. And not just language, but even if you go and open a cupboard door – they see something once, and they figure out how to do it.”[22] And even then, as my experience with the conversion therapy example and Sandeman’s experience with the same-sex marriage example showed, ChatGPT’s so-called moral responses are not genuine, independently derived moral reflections but rather pre-programmed, data-system responses that mimic the personal biases of the developers.

ChatGPT is clever, and is becoming even more clever by the day. But its capacities are limited to the parameters of the algorithms that control it, as predetermined by the people who created it. It remains merely a useful, and at times astounding, inanimate information aggregator and arranger.

Thus the fear that technology in any form will one day overtake human beings is put to rest by two reassuring realities: first, that the omniscient God is actively in control of all things, upholding them moment by moment by his word of power (Hebrews 1:3); and second, that humans are much more than just heartless data reassemblers.

- Relating Christianly to Technology including ChatGPT.

The Capacity to Create and Enhance ChatGPT is God-Given: Structure vs Direction

As already mentioned, imagination and creativity are aspects of the character of God that he graciously gave to Adam and Eve and therefore to all humanity. We make cultural artifacts like houses, toys, washing machines, and yes, ChatGPT, because God has given us the capacity to do so.

This is part of what Al Wolters calls the structure of creation – how God set up the world to be. God set up the world so that humans would be imaginative cultural formers. Our primary operational concern should not be with the structure alone, but with the direction, which is the way that we use any structure – either directed towards honouring and glorifying God or being used as a disobedient, sinful application of the structure that distorts God’s intention and meaning for his world and people.[23]

We’ll see what these twin ideas of structure and direction mean for ChatGPT in a moment, but first, consider an example, given by Derek Schuurman, that explains their meaning:

The concept of direction helps us understand that sin does not create anything on its own – sin is like a virus that attaches itself to creation like a parasite. It may sound unusual, but even something like internet pornography is only possible because of God’s creation: he created the possibility for computer technology as well as human sexuality [i.e. structure], but internet pornography is taking God’s good creational gifts and twisting and perverting them in a way they were never intended to be [i.e. direction].[24]

Building on this idea, we can see that to ask whether ChatGPT is good or bad is probably not the best question. Rather, we should be exploring the directions in which we can use the structure called ChatGPT that are constructive and culture-building in biblically authentic ways. This will include considerations of ChatGPT’s impact on interpersonal relationships, fidelity in academic work, ownership of knowledge, ease in bringing disconnected but relevant datasets together, and so on. To quote Schuurman again, but substituting his word “technology” with our word “ChatGPT”, “We can direct ChatGPT in obedience to God or toward more disobedient uses. Asking whether ChatGPT is good or bad presents us with a false dichotomy. ChatGPT is, in fact, part of God’s good creation. The question to ask is, To what direction is it pointed?”[25]

Is ChatGPT a Tool, a Medium, or a Practice?

In their book Digital Life Together – the Challenge of Technology for Christian Schools, Smith, Sevensma, Terpstra and McMullen suggest that technology can be viewed as one or any combination of tool, medium, or practice.[26] The same technology can serve one function at one time, and another function at another time. For example, a computer might be a tool for conducting research one moment, and a medium for communicating with parents at another. Or, by connecting a class in one country with a class in another nation, it can be both tool and social practice at the same time.

Issues to Consider

At the start of 2023, New York City banned the use of ChatGPT in its public schools, with an Education Department spokesperson claiming that the chatbot seriously hindered the development of critical thinking and problem-solving skills.[27] A few months later, Italy temporarily banned ChatGPT altogether. Because of other problems related to AI-based cheating, some schools in Australia now only allow student-assessable work to be produced in class under direct teacher observation. I suggest that, though the concerns noted here are real, such ostrich-like responses will not help students learn how to deal constructively with the very real societal challenges that confront them.

The public school, with its pluralistic and secular base, may be forced into confusion, retreat and reactionary mandates when competing voices clash over controversial issues. Not so the Christian school, where a rich, biblically-grounded, time-tested, truth-based, reformed critical realist foundation gives it the capacity to develop consensus and operational clarity as new challenges like AI arise.

In that light, I suggest a few concepts and strategies that might be worth considering in Christian schooling, and in the broader Christian culture, as we wrestle with how to work with ChatGPT and associated constructions in our day-to-day lives as fallible followers of Jesus Christ in a fallen world.

i. Discernment. From the definition of education I’ve already provided, you can see that education is more than the transmission of knowledge. It’s a relational enterprise with a clear missional directive. In this context, a key goal is that students will see and develop discernment (Philippians 1 again) through their schooling activities, including in how and where they and we use AI tools such as ChatGPT. Therefore, at levels appropriate to their maturity, discussing ethics and rules relating to AI and ChatGPT, within the normative framework of a Christian worldview, should be a regular part of the everyday schooling experience for teachers and students.

We must discern norms for the responsible use of AI… AI systems can perpetuate injustice, a real threat as big data is employed in various fields including insurance, policing, marketing, loans and politics. We will need to discern creational norms for AI which include considerations like justice, caring, stewardship, transparency and trust. Appropriate norms should point us towards using AI for opening up new possibilities to show love to our neighbor and care for the earth and its creatures. — Derek Schuurman, March 2023

https://www.christiancourier.ca/chatting-with-chatgpt/

ii. Perhaps ChatGPT could be used to generate an answer to a teacher-given challenge, and students then asked to evaluate that answer and improve upon it and/or personalise it (it seems to me that many ChatGPT responses are bland and have very limited ability to respond incisively to individual contexts).

iii. Rather than just issuing rules about plagiarism and attribution, schools, and especially universities, can treat the emergence of ChatGPT as a great opportunity to discuss the whole issue of the ownership of knowledge. This means being brave enough to confront (confirm/reject/modify?) the prevailing assessment ideologies insisted upon by regulatory authorities in western settings, which are often at variance with culturally-embedded responses to similar issues in some non-western settings. Of course, it’s also important that these assessment ideologies first be reviewed and refined in the light of biblical norms. I merely point here to broader but related issues highlighted by AI (matters surrounding epistemic humility, tenure, and publish-or-perish mentalities are a few examples) – issues that are long overdue for serious and ongoing reflection within every authentic Christian higher education institution. David Smith hints at some associated issues when he comments as follows:

iv. ChatGPT can be used for information gathering, in a similar vein to Wikipedia, with the advantage that you can refine your questions in conversation with ChatGPT, directing it to regenerate (ChatGPT’s term) more specific information about particular topics. It’s a very smart search engine. Use it that way, with an awareness of the provenance issues already raised in this paper. Remember, then, that in proper research, sources must be verified and credited, and that instances do exist (see earlier comments) where ChatGPT has deliberately provided false information for effect.

v. Differentiated instruction (working on the same topic or concept but with students at different levels of understanding in the same class) can be facilitated by having ChatGPT generate materials appropriate to varying student capabilities.

vi. Have students conduct an activity with ChatGPT, and then conduct exactly the same activity with Google’s Bard (now Gemini). Discuss the results, looking for factual differences and philosophical/worldview biases (remember that even the selection and non-selection of information reflects a bias).

vii. Ask ChatGPT how to solve specific problems (develop a study plan, design a new house, create a budget, plan a birthday party…) and then critique and adapt the response.

viii. How AI Short-Circuits Art. Discuss with your students the fact that God created work before the Fall, and that the gift of work is the means whereby men and women are able to create things. Challenge them with the proposition that only God creates by the Word of his Power. Thus genuine creativity, and the satisfaction that comes from it, results from the connection between physical or mental work and a created result, rather than from the instant pressing of an AI button. Think deeply about how Jared Boggess put it in the box below.

ix. In July 2023, Tasmanian Christian teachers Paul Mathews and Jacob Skierka developed an AI-powered teaching assistant. It’s called MyTeachersAide and has been designed as a virtual assistant and AI teaching tool. “It includes features such as automated lesson planning, curriculum alignment, resource creation and more. It focuses on streamlining the planning process so that teachers can feel confident and supporting both teachers and students on their educational journey.” You might want to access their resource and even assist as an early-stage adopter (https://myteacheraide.com).

x. Avoid the danger of the immediacy mentality. Kelly Kapic, author of the book You’re Only Human, claims that the AI universe, including ChatGPT, has badly misshaped our humanity. In a recent interview, he commented that human beings are “more shaped by the car, smartphone, and the ‘Cloud’ nowadays, than by the horse, the crop, and our elderly neighbor. The former set stresses speed, quick recharge and endless expansion, while the latter forces a slowness, recognises seasonal differences, and honors wisdom over mere information.”[28]

Speed and facility can become a stress-filled tyranny: in a survey conducted by David Smith and his colleagues, 71% of students admitted that technology had led them to look for quick and easy answers.[29] Encouraging students to take time to pause and reflect, to forgo frenzy and discover serenity, to think deeply rather than speak, and only later to chat with each other without IT interference, could all help to produce a faithful direction in our use of ChatGPT inside and outside the classroom. I say this mindful of the contention that our aversion to rest may arise because rest creates spaces in which the intolerances of the current age press in with greater anguish. As the seventeenth-century Christian mathematician Blaise Pascal put it, “What people want is not a soft and easy life, which leaves us with time on our hands to brood over our unhappy lot, or to worry about the dangers of war, or the burdens of high office. In busyness we have a narcotic to keep us from brooding and to take our mind off these things.”[30]

In Psalm 46, the Psalmist reflects upon the security of the city of God (Jerusalem in the immediate context, but more broadly speaking embracing the security that all of God’s people have in God). It’s a liturgical choral piece sung corporately by the congregation. Though troubles and conflicts abound, though kingdoms rise and fall, and we might add, though turmoil and frenzy press upon us in the agitated immediacy of the AI age, confidence in God enables us, in the midst of this, to “Be still, and know that I am God.” I think that we need to habituate this heart-driven serenity in our approach to ChatGPT.

Conclusion

After all these reflections, do I still have these discordant feelings of awe and trepidation about ChatGPT and other AI products, past, present and future? Yes, I do. But they are tempered by a deeper reality: a loving, involved, all-powerful and wise heavenly Father has not left us ill-equipped to deal appropriately with any human creation, when we recognise its limitations and bring its products and uses under the lordship of Christ, reflecting a heart commitment to honour and serve him in all that we do.

ChatGPT is a finite, technical product of the human mind. It can be very helpful, but it can also be very destructive. With the Lord’s help, we can discern how to use it wisely and obediently in our joyful daily lives of worship and service. And we have the privilege of assisting those who look to us for nurture in developing similar approaches and skills.[31]

In conclusion then, it seems to me that to think Christianly about ChatGPT and AI, and to have a hope-filled and serene capacity to navigate these new technologies with all their positive and negative directional applications, we should start with a heart commitment to God. This heart commitment embraces the full biblical metanarrative from Genesis to Revelation, and allows the gospel of Jesus to direct all of life including our culture-forming activities such as developing and using human products like ChatGPT.

Let me finish with the words of the Psalmist once again, from Psalm 46 (NIV):

For the director of music. Of the Sons of Korah. A song.

1 God is our refuge and strength,

an ever-present help in trouble.

2 Therefore we will not fear, though the earth give way

and the mountains fall into the heart of the sea,

3 though its waters roar and foam

and the mountains quake with their surging.

4 There is a river whose streams make glad the city of God,

the holy place where the Most High dwells.

5 God is within her, she will not fall;

God will help her at break of day.

6 Nations are in uproar, kingdoms fall;

he lifts his voice, the earth melts.

7 The Lord Almighty is with us;

the God of Jacob is our fortress.

8 Come and see what the Lord has done,

the desolations he has brought on the earth.

9 He makes wars cease

to the ends of the earth.

He breaks the bow and shatters the spear;

he burns the shields with fire.

10 He says, “Be still, and know that I am God;

I will be exalted among the nations,

I will be exalted in the earth.”

11 The Lord Almighty is with us;

the God of Jacob is our fortress.

ChatGPT/AI Glossary of Terms

This glossary lists some key terms you will come across as you explore the world of ChatGPT and AI. The initial version was developed by No Jitter (www.nojitter.com), but this version includes additional entries and enhancements from other informed commentators.

| TERM | DEFINITION |
| --- | --- |
| AI Avatar | A virtual character, or a digital representation of a person in an online space. Avatars can take any form or shape, and can be helpful but also mischievous (such as putting a famous person’s face on a body in a compromising situation). |
| Algorithm | An extended set of instructions or rules (i.e. the programming) that enables machines to analyse data and generate learned responses based on that data. The key factors are the instructions and the type and size of the datasets, because together they shape the biases of the generated outputs. This is why provenance and discernment are so important in understanding how to use ChatGPT-generated material. |
| Autonomous Agents | Generative AI models that run “on a loop” to generate self-directed instructions and actions with each iteration (loop). They are highly scalable because they do not rely on humans to guide their conversations. Examples of autonomous agents include the “Westworld” simulation, Camel, BabyAGI and AutoGPT. |
| Chatbot | A computer program that simulates human conversation in an attempt to answer human inquiries. Similar in meaning to generative AI, but modern generative AI chatbots use more advanced algorithms and far larger datasets (LLMs) than earlier chatbots such as ELIZA in the 1960s. |
| ChatGPT | A revolutionary, natural language-based chatbot created by OpenAI. It was first released to the public in November 2022, and its name stands for “Chat Generative Pre-trained Transformer.” It interacts with users and very quickly creates responses to questions and instructions, producing essays, poetry, reports etc. The original ChatGPT remains a free service, but in March 2023 GPT-4 was released to paying subscribers through ChatGPT Plus. |
| Cookies | Website cookies are small text files that are automatically stored on your computer when you visit certain web pages. They may be used for various purposes, such as greeting returning visitors by name, keeping track of what is in a shopping basket, or remembering items that have been looked at so that they can be recalled on the next visit. But they are also used in data mining, which is why, after you visit a site to view possible battery storage systems, for example, your personal internet feeds may suddenly become clogged with unsolicited offers from battery storage providers you have never heard of. |
| Data Mining | AI-powered data mining is the practice of extracting valuable information about a person from their website cookies, social media, live chats, internet activity, shopping purchases, rewards programmes, location data, Google search history, etc. |
| Deep Learning | A term associated with neural network learning: it describes a neural network that is more than three layers deep ingesting and processing large datasets of unstructured data, thus reducing some of the human labour involved in training an AI model. Deep learning is often used for complex data processing that allows an AI model to analyse and learn from data, detect patterns, perform predictive analytics, and rapidly assess large volumes of data. |
| Deepfake | The use of AI technology to generate convincing and difficult-to-detect synthetic images, audio or videos, often with the purpose of scamming viewers or listeners. |
| Diffusion Model | A type of generative AI model, used especially for creating images (for example in DALL-E and Stable Diffusion), that is trained by gradually adding random noise to its training images and learning to reverse that process, so that it can then turn random noise into new images that match a prompt. |
| Embedded AI (also called on-device AI) | The integration of AI into devices or systems, such as wearable devices, smartphones, smart home devices, industrial automation systems, robotics, and autonomous vehicles. |
| Foundation model (also known as transformer model; FM) | An artificial intelligence model that is pretrained on a broad range of data and can then be adapted to other tasks. FMs, which have very large numbers of parameters, are (pre)trained on vast datasets in an unlabelled/unsupervised fashion. Neural networks and deep learning help make FMs possible. Most current foundation models use a deep learning architecture called the transformer, in which sequential input data, like a sentence, is processed all at once – much in the same way we read or hear sentences and rapidly process how the individual words all relate to one another. This is why a foundation model is sometimes called a transformer model. |
| Generative AI | Generative AI works by identifying patterns in its training data, then creating an output that matches the patterns it identifies. The best-known implementations of generative AI are OpenAI’s ChatGPT and Google’s Bard (now Gemini) for text, but there’s also DALL-E for images. Generative AI tools are also being incorporated into products like Microsoft’s Copilot, Salesforce’s Einstein, Slack, and many others. Note that generative AI outputs are not neutral, nor are they generated by machines capable of independent moral decision-making. The outputs conform to the parameters set for them by their designers and as such reflect their designers’ priorities and biases. For example, ChatGPT would argue one side of the debate over gender conversion therapy but refused to present the opposite perspective, on the grounds that such a position was “unethical, ineffective, and potentially harmful.” To date, most generative AI implementations are designed to assist humans (like a power tool) rather than to function without them. |
| Hallucination | This happens when generative AI models such as LLMs generate text or images that are factually incorrect or nonsensical. Sometimes, because of the design of the programme, these hallucinations (i.e. factual errors) can be intentional for effect. For example, a ChatGPT-generated book review included the comment “I received a free copy of this book for review purposes” when this clearly was not the case. |
| Large language model (LLM) | A neural network trained on large quantities of unlabelled text. These next-step neural networks use a method of data training called unsupervised learning, where a model is given a dataset without explicit instructions on what to do with the data, and the model learns and infers from the data, setting up its own classification systems and performing analytic outcomes based on how it’s processed the data. |
| Metaverse | The shared virtual (online) space where users can interact with each other and with digital objects. |
| Model | The technology and concept at the heart of artificial intelligence: a model is the program that analyses data sets to find patterns and provide data-driven analytics in response. |
| Modelling | A model is the concept; modelling is the process of creating, training, and deploying a model that will analyse available datasets and provide data-driven analytics in response. |
| Neural network (NN) | First proposed in 1943 by Warren McCulloch and Walter Pitts, neural networks (NNs) are a computing model based on thousands of processing nodes that are both multilayered and densely connected. NNs are composed of multiple node layers (input, hidden and output); each layer is itself composed of individual nodes (artificial neurons). These nodes connect to each other in multiple ways; an individual node can receive data from a node it’s connected to in the layer below it, and relay data to a node it’s connected to in the layer above it. Labelled training data (e.g. a set of photos of animals in which each animal is labelled) goes into the input layer, then is processed by passing through the (multiple) hidden layers until the output is achieved. Using our animal example, an NN might go through multiple rounds of training before it can correctly distinguish between a Persian cat, a llama, a polar bear and a Samoyed dog. |
| Predictive AI | An AI model built to infer information about different data points so that it can make decisions. These models have mostly been used to automate human processes to a greater or lesser extent. An example of the former (greater automation) would be email filtering; an example of the latter might be a medical application. These predictive AI models must exhibit (very) high levels of accuracy because they can operate with minimal human oversight. |
| Prompt | The instruction or question through which people direct and otherwise speak with an AI. This is typically done via natural language (writing an instruction in text), but it can also be done with computer code. Prompt engineering is the process of revising and/or tailoring prompts to get the AI to generate a (high quality) desired result. |
| Provenance | The source(s), and thus the ethical and philosophical biases, embedded in the programme directions and datasets used to create AI models, which shape the worldview character of the outputs (there is no neutrality). A lack of known provenance associated with AI means that much care and discernment need to be applied in using and interpreting AI outputs. |
| Training Data | The material, also referred to as a dataset, on which models are trained; it can include text, images, sound, sensor data and video data. LLMs were trained on text-based material gathered from the Internet. |
| Unsupervised learning | This type of neural network training relies on algorithms to analyse and cluster unlabelled data sets, and this method, applied to data mining (one reason why companies love cookies on people’s computers), helps discover hidden patterns or groupings without the need for human intervention. There are three types of machine learning (ML) algorithms associated with unsupervised learning: Clustering, Association and Dimensionality Reduction. An example of clustering algorithms in use is customer segmentation (e.g. by purchase history, social media, geography, etc.). Association uses different rules to find relationships between variables in a dataset (e.g. customers who bought this also bought XYZ). Dimensionality reduction involves techniques that reduce the number of input variables in a dataset so that redundant parameters do not overrepresent their impact on the outcome (e.g. autoencoders remove noise from visual data to improve picture quality). Somewhat confusingly, this practice of using unlabelled data to train a neural network is sometimes also called semi-supervised learning. |

[1] https://www.computer.org/csdl/magazine/co/2023/09/10224582/1PI5P76M3jq

[2] Other OpenAI products, such as DALL-E and the OpenAI API, create images and art, and support illustrative and corrective applications such as spreadsheet creators, language translators and turn-by-turn directions, for use in education, commerce and industry. https://platform.openai.com/examples

[3] https://www.forbes.com/advisor/business/software/what-is-a-chatbot/. However, Generative AIs such as ChatGPT and Bard generally are more powerful and conversationally responsive than typical call-centre customer support lines.

[4] https://edition.cnn.com/2023/01/26/tech/chatgpt-passes-exams/index.html

[5] For example, in June 2023, a government university near where I live produced a series of papers from professors addressing AI and ChatGPT issues, pointing out the benefits and challenges of the technology. They encouraged students to recognise that generative AI work was interesting but could be full of biases and deliberate factual errors. Nevertheless, readers were left with the implied impression that the ease with which generative AI allowed students to generate non-original but often untraceable responses to assignments remained a real headache for the institution. See https://ltc.uow.edu.au/hub/page/lt-conversations-artificial-intelligence

[6] https://www.bbc.com/news/world-us-canada-65452940

[7] https://www.bbc.com/news/technology-65855333. For a copy of the March open letter itself, visit https://futureoflife.org/open-letter/pause-giant-ai-experiments/

[8] https://www.investors.com/news/technology/chatgpt-is-just-the-tip-of-the-iceberg-in-content-creating-artificial-intelligence-get-ready-for-a-lot-of-disruption/

[9] https://www.vox.com/future-perfect/2024/2/28/24083814/google-gemini-ai-bias-ethics

[10] https://apnews.com/article/google-gemini-ai-chatbot-imagegenerator-race-c7e14de837aa65dd84f6e7ed6cfc4f4b

[11] https://arxiv.org/pdf/2205.11487 (page 9).

[12] https://www.youtube.com/watch?v=oxXpB9pSETo

[13] https://edition.cnn.com/2024/02/04/asia/deepfake-cfo-scam-hong-kong-intl-hnk/index.html

[14] https://www.theguardian.com/technology/article/2024/may/10/ceo-wpp-deepfake-scam

[15] https://www.bbc.com/news/education-65316283

[16] The actual response was, “I’m sorry, but as an AI language model developed by OpenAI, it’s not appropriate for me to generate content that promotes discrimination or denigrates individuals based on their identity, including their sexual orientation.” [https://theothercheek.com.au/chatgpt-turns-out-to-have-opinions/]

[17] As another example, see the article teacher Lisa Dumicich had ChatGPT produce concerning Christian education, as reported in the June 2023 edition of Nurture Magazine, produced by Christian Education National in Australia.

[18] By way of comparison, I gave exactly the same review task to Google’s Bard. The product was as complimentary as that of ChatGPT, but it was much more accurate and substantive, revealing some knowledge of the content and main ideas of the book.

[19] For a further expansion of what it means to know and apply a Christian mind to specific life encounters, see the Edlin article, A Mindset for Evaluating the World Around Us.

[20] Christian Courier, 17 March 2023. https://www.christiancourier.ca/chatting-with-chatgpt/

[21] To further explore the notion of human creativity being an expression of the very nature of God, see Edlin’s paper, “Imagination and Its Place in the Christian School”.

[22] https://www.christianitytoday.com/news/2023/january/chatgpt-artificial-intelligence-ethics-tom-kehler.html

[23] The overall concept of structure and direction is helpful but I feel not totally comprehensive and needs a fuller explanation that is not appropriate here. It needs to be said that the key structure of God-given human imaginativity creates cultural artifacts, but because of the Fall, some of these artifacts can be classed as good or bad in themselves (such as antibiotics generally being good and a child exploitation movie being bad), whilst for other artifacts their goodness or badness can change as determined by their use or direction.

[24] Brue, E., Schuurman, D., & VanderLeest, S. (2022). A Christian field guide to technology for engineers and designers. Downers Grove IL: IVP Academic, p. 121.

[25] Ibid.

[26] Smith, D., Sevensma, K., Terpstra, M., & McMullen, S. (2020). Digital life together: The challenge of technology for Christian schools. Grand Rapids MI: Eerdmans, pp. 17-18.

[27] The Guardian Online, 7 January 2023. https://www.theguardian.com/us-news/2023/jan/06/new-york-city-schools-ban-ai-chatbot-chatgpt

[28] Interview, Christian Courier Magazine, 5 June 2023. https://www.christiancourier.ca/youre-only-human/

[29] Smith, Sevensma et al., p. 234.

[30] Pascal, Blaise (1652-56; this publication 1989). The mind on fire: A faith for the skeptical and indifferent. An anthology of the writings of Blaise Pascal, edited by James M. Houston. Multnomah Publications, pensée #109, p. 97.

[31] Byron and Beth Borger operate an independent Christian bookstore out of Dallastown in Pennsylvania. However, they offer a wonderful service to thinking Christians through their online presence and the regular insightful (and original – not ChatGPT) reviews that they write about strategic publications. In June 2023, they distributed a newsletter focussing specifically on books relating to AI. The books, and the reviews, are well worth a read. The Borgers’ newsletter can be found online at https://www.heartsandmindsbooks.com/author/byron/